The Q-Sensei Logs Kafka Connector connects Q-Sensei Logs with your Apache Kafka Cluster and Confluent's Schema Registry. This tutorial shows how to configure the connector and start the connector service.

Prerequisites

Supported Kafka and Confluent versions

- Confluent Platform version >= 3.3.x

- Release date – August 2017

- Apache Kafka version >= 0.11.0.x

- Release date – June 2017

For older versions please contact us.

Supported Serialization Formats

- Avro

- JSON

System Requirements

For the Q-Sensei Logs Kafka Connector we recommend the following configurations:

| Component | Guidelines |
|---|---|
| Docker | Docker Engine version 19.03.4 |
| docker-compose | docker-compose version 1.24.1 |
| Operating System | Ubuntu, Linux (AWS, RHEL) |
| Available RAM | Minimum 4 GB; Recommended 8 GB |
| Available Disk Space | 10 to 12 GB for docker logs |

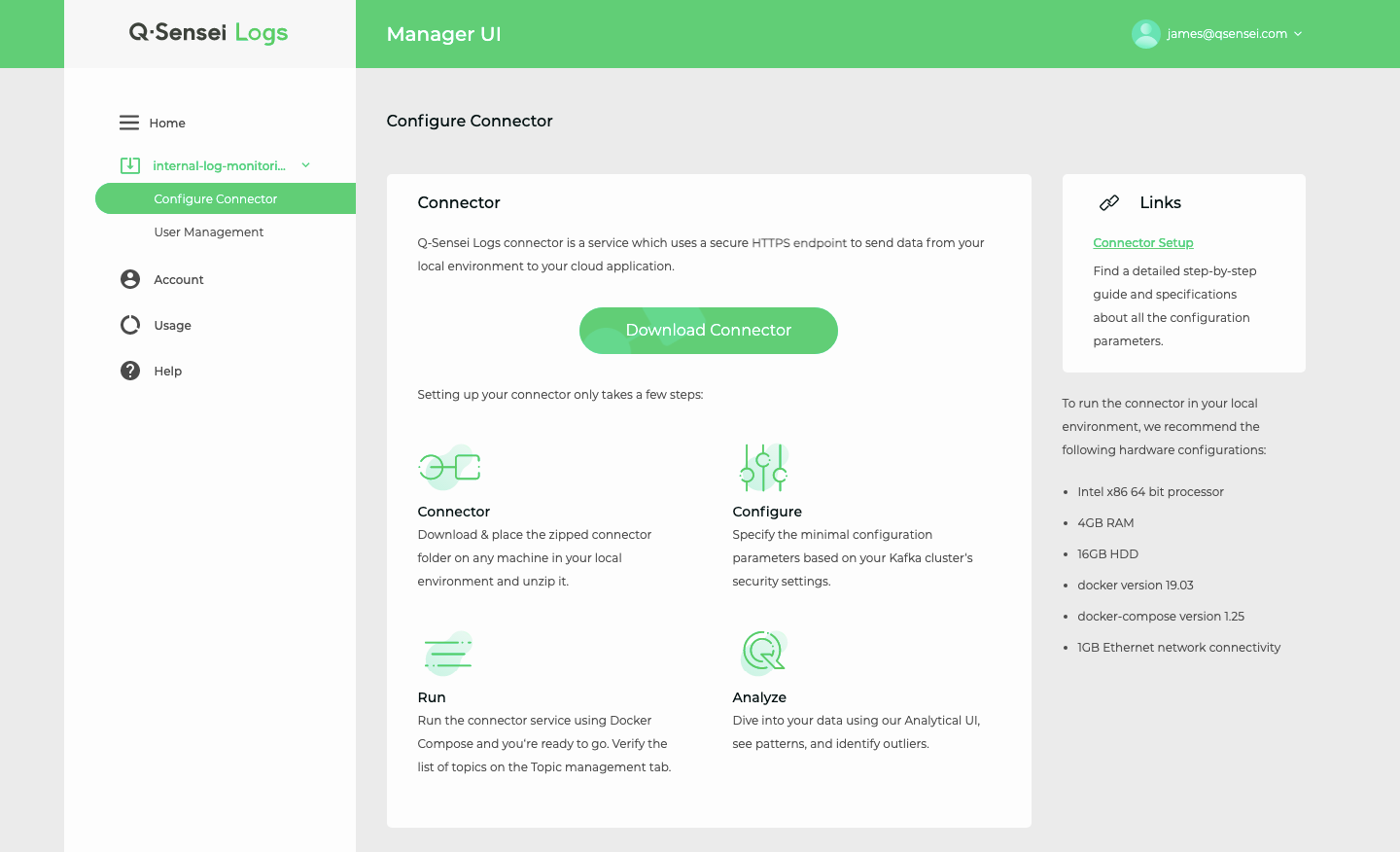

Step 1: Download Connector from Manager UI

Each connector belongs to a specific deployment. To download the connector, select the deployment in your Q-Sensei Logs account and click on the Configure Connector link in the left navigation menu.

Click the Download Connector button to download the connector package to your local system. The connector package has the following naming convention:

qsensei_logs_connector_<YYYY-MM-DDTHH-MM-SS>.zip

Step 2: Unzip Connector Package

Place the connector package on a machine in your local environment. The machine needs to have network connectivity to the Kafka Cluster. Create a directory on the machine and move the zipped folder to that directory using the following commands:

$ mkdir kafka-connector

$ mv qsensei_logs_connector_2020-09-04T22-44-25.zip kafka-connector/

$ cd kafka-connector

Unzip the folder:

$ unzip qsensei_logs_connector_2020-09-04T22-44-25.zip

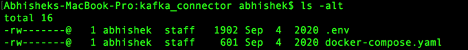

List all files:

$ ls -alt

You should see the following 2 files

Kafka Secrets directory

Create a directory on the machine where the connector package is installed. We will refer to this directory location as the Kafka secrets directory:

$ mkdir -p /home/qsensei/secrets

Step 3: Basic Configuration

- All configuration parameters are specified in the .env file which is included in the zipped folder that you have downloaded from the Manager UI.

- Consumer configuration parameters are prefixed with CONSUMER_

- Schema Registry configuration parameters are prefixed with SCHEMA_REGISTRY_

- In the docker container all secrets are expected to be at the location /etc/kafka/secrets

- /home/qsensei/secrets is mapped as a docker volume to /etc/kafka/secrets
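The volume mapping in the list above means that a file placed in /home/qsensei/secrets is referenced in the .env file by its /etc/kafka/secrets path. A minimal sketch of that translation follows; `container_secret_path` is a hypothetical helper and not part of the connector package, and it assumes secret files sit directly in the secrets directory rather than in subdirectories.

```shell
# Sketch: translate a host-side secret file into the path the container sees.
# container_secret_path is a hypothetical helper, not shipped with the connector.
container_secret_path() {
  # Only the file name is kept; the prefix inside the container is fixed.
  printf '/etc/kafka/secrets/%s\n' "$(basename "$1")"
}

# Value to use for CONSUMER_SASL_KERBEROS_KEYTAB in the .env file
container_secret_path /home/qsensei/secrets/saslconsumer.keytab
```

The printed path is the value you would enter in the .env file for the corresponding key.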

All configuration parameters can be specified in the .env file. For the connector to run correctly, a small set of these parameters must be set.

Open the .env file in an editor of your choice. Depending on your Kafka cluster’s security protocol you are required to specify some parameters as color coded below.

| Color Code | Meaning |
|---|---|
| Green | Required minimal parameters. Ignore the rest if your security protocol is PLAINTEXT |
| Blue | Required, if your Kafka cluster’s security protocol is any one of GSSAPI, SSL, SASL or SASL_SSL |
| Purple | Required, if you have Avro messages in your Kafka cluster. |
| Yellow | Optional parameters you can specify to control Topics ingested in a deployment. The Topics can be transparently distributed across multiple deployments, if you operate large Kafka clusters. |

You can ignore all other parameters, which are not color coded.

CONSUMER_DEBUG=consumer,cgrp,broker

CONSUMER_BOOTSTRAP_SERVERS=logskafka.qsensei.com:19094,logskafka.qsensei.com:29094,logskafka.qsensei.com:39094

CONSUMER_SECURITY_PROTOCOL=SASL_SSL

CONSUMER_TOPIC=

CONSUMER_TOPIC_BLACKLIST=docker-connect-status,_schemas,docker-connect-offsets,docker-connect-configs,__consumer_offsets,__schemas,clickstream_users

CONSUMER_MAX_POLL_INTERVAL_MS=86400000

CONSUMER_SESSION_TIMEOUT_MS=60000

CONSUMER_ENABLE_AUTO_COMMIT=False

CONSUMER_ENABLE_SPARSE_CONNECTIONS=False

CONSUMER_AUTO_OFFSET_RESET=earliest

CONSUMER_ENABLE_AUTO_OFFSET_STORE=False

CONSUMER_API_VERSION_REQUEST=True

CONSUMER_SASL_MECHANISMS=GSSAPI

CONSUMER_SASL_KERBEROS_SERVICE_NAME=kafka

CONSUMER_SASL_KERBEROS_KEYTAB=/etc/kafka/secrets/saslconsumer.keytab

CONSUMER_SASL_KERBEROS_PRINCIPAL=saslconsumer/logskafka.qsensei.com@LOGSKAFKA.QSENSEI.COM

CONSUMER_SASL_USERNAME=

CONSUMER_SASL_PASSWORD=

CONSUMER_SSL_CA_LOCATION=/etc/kafka/secrets/snakeoil-ca-1.crt

CONSUMER_SSL_CERTIFICATE_LOCATION=/etc/kafka/secrets/kafkacat-ca1-signed.pem

CONSUMER_SSL_KEY_LOCATION=/etc/kafka/secrets/kafkacat.client.key

CONSUMER_SSL_KEY_PASSWORD=confluent

KRB5_CONFIG=/etc/kafka/secrets/krb.conf

KAFKA_SECRETS_DIR=/home/qsensei/secrets

EVENTS_CONSUMER_GROUP_ID=qsensei_events_consumer_grp

SCHEMA_CONSUMER_GROUP_ID=qsensei_schema_consumer_grp

SCHEMA_NUMBER_OF_MESSAGES=10

SCHEMA_REFRESH_INTERVAL_SECONDS=300

SCHEMA_REGISTRY_URL=http://logskafka.qsensei.com:8081

SCHEMA_REGISTRY_SSL_CA_LOCATION=

SCHEMA_REGISTRY_SSL_CERTIFICATE_LOCATION=

SCHEMA_REGISTRY_SSL_KEY_LOCATION=

UPLOAD_API_URL=${UPLOAD_API_URL}

UPLOAD_API_KEY=${UPLOAD_API_KEY}

DEPLOYMENT_ID=5

DELAY=3

INDEXING_DELAY=10

BATCH_SIZE=1000

The parameter names follow the standard Kafka consumer configuration names, which are also documented on Confluent’s website: https://docs.confluent.io/current/installation/configuration/consumer-configs.html

The configuration file on your system will look similar to the listing above.

Only a small number of these configuration parameters are required for your Q-Sensei Logs deployment.

Consumer Topics

In your .env file, update the key CONSUMER_TOPIC and provide a comma-separated list of the topic names that the deployment should consume from. Leave the value blank if you want to consume from all topics.

CONSUMER_TOPIC=topic-name1,topic-name2,topic-name3
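If you prefer to script the change instead of using an editor, the following sketch shows one way to set a key in a KEY=VALUE file. `set_env_key` is a hypothetical helper, not part of the connector package; it assumes each key sits on its own line with no spaces around the equals sign and that values contain no backslashes.

```shell
# Sketch: set KEY=VALUE in an .env-style file (hypothetical helper).
set_env_key() {
  # Usage: set_env_key FILE KEY VALUE
  env_file=$1
  env_key=$2
  env_value=$3
  env_tmp=$(mktemp) || return 1
  # Rewrite the existing KEY= line where it stands; append it if it is absent.
  awk -v k="$env_key" -v v="$env_value" '
    index($0, k "=") == 1 { print k "=" v; found = 1; next }
    { print }
    END { if (!found) print k "=" v }
  ' "$env_file" > "$env_tmp" || { rm -f "$env_tmp"; return 1; }
  # Copy back instead of moving so the original file permissions are kept.
  cat "$env_tmp" > "$env_file"
  rm -f "$env_tmp"
}

# Example call, run from the directory that contains the .env file:
# set_env_key .env CONSUMER_TOPIC "topic-name1,topic-name2,topic-name3"
```

Because the match requires the key to be followed directly by `=`, setting CONSUMER_TOPIC leaves the CONSUMER_TOPIC_BLACKLIST line untouched.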

Consumer Topic Blacklist

In your .env file, update the key CONSUMER_TOPIC_BLACKLIST and provide a comma-separated list of the topic names that the deployment should not consume from.

CONSUMER_TOPIC_BLACKLIST=docker-connect-status,_schemas,docker-connect-offsets,docker-connect-configs,__consumer_offsets,__schemas

Note

For the connector setup there are some Kafka topics that are blacklisted by default. Please do not delete any of these topics. You can add additional topics to that list.
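After editing the blacklist, it can be worth confirming that none of the default entries were removed by accident. The sketch below assumes the six topics in the example above are the defaults; `missing_default_topics` is a hypothetical helper, not part of the connector package.

```shell
# Sketch: report default blacklist entries that are absent from a given value.
# The default list is taken from the example in this section (an assumption).
missing_default_topics() {
  # Wrap the list in commas so that whole topic names are compared.
  current=",$1,"
  for topic in docker-connect-status _schemas docker-connect-offsets \
               docker-connect-configs __consumer_offsets __schemas; do
    case "$current" in
      *",$topic,"*) ;;                    # still present
      *) printf '%s\n' "$topic" ;;        # removed by mistake
    esac
  done
}

# Example call with the value of CONSUMER_TOPIC_BLACKLIST:
# missing_default_topics "docker-connect-status,_schemas,my-extra-topic"
```

An empty result means every default entry is still in place. Comparing whole, comma-delimited names keeps `_schemas` and `__schemas` from being confused with each other.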

Step 4: Security Protocol

Depending on the security protocol that your Kafka cluster enforces, you have to add a valid value in the configuration file. The following values are supported:

| Value | Meaning |
|---|---|
| PLAINTEXT | No authentication and no encryption supported |
| SSL | Encryption supported, but no authentication |
| SASL_PLAINTEXT | Authentication supported, but no encryption |
| SASL_SSL (GSSAPI) | Both authentication and encryption supported |
| SASL_SSL (SCRAM) | Both authentication and encryption supported |

In your .env file, update the key CONSUMER_SECURITY_PROTOCOL and provide the security protocol that your Kafka Cluster enforces.

CONSUMER_SECURITY_PROTOCOL=SASL_SSL

Note

Depending on the value of CONSUMER_SECURITY_PROTOCOL you have to make additional configurations.

Security Configurations

For the security value PLAINTEXT please update the following configuration parameters:

| Key | Value | Example |
|---|---|---|
| CONSUMER_SECURITY_PROTOCOL | PLAINTEXT | |
| CONSUMER_BOOTSTRAP_SERVERS | Comma-separated list of Kafka broker URLs | logskafka.qsensei.com:19094,logskafka.qsensei.com:29094,logskafka.qsensei.com:39094 |

The Kafka secrets directory /home/qsensei/secrets will be empty for this security setting.

For the security value SSL you have to configure the following values.

| Key | Value | Example |
|---|---|---|
| CONSUMER_SECURITY_PROTOCOL | SSL | |
| CONSUMER_BOOTSTRAP_SERVERS | Comma-separated list of Kafka broker URLs | logskafka.qsensei.com:19094,logskafka.qsensei.com:29094,logskafka.qsensei.com:39094 |
| CONSUMER_SSL_CA_LOCATION | File or directory path to CA certificate(s) for verifying the broker's key | /etc/kafka/secrets/snakeoil-ca-1.crt |
| CONSUMER_SSL_CERTIFICATE_LOCATION | Path to client's public key (PEM) used for authentication. | /etc/kafka/secrets/kafkacat-ca1-signed.pem |
| CONSUMER_SSL_KEY_LOCATION | Path to client's private key (PEM) used for authentication. | /etc/kafka/secrets/kafkacat.client.key |
| CONSUMER_SSL_KEY_PASSWORD | Private key passphrase | confluent |

The Kafka secrets directory /home/qsensei/secrets will have the following files.

- CA Certificate(s)

- Client’s public key

- Client’s private key

Note

In the .env file, do not change the path prefix /etc/kafka/secrets as all secrets are expected at /etc/kafka/secrets. Only specify the names as they appear in /home/qsensei/secrets.
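Before starting the connector, you may want to confirm that every /etc/kafka/secrets path referenced in the .env file has a matching file in the Kafka secrets directory on the host. The following is a sketch under that assumption; `check_secret_files` is a hypothetical helper, not part of the connector package, and it assumes file names without spaces.

```shell
# Sketch: list secret files that the .env file references but the host lacks.
check_secret_files() {
  # Usage: check_secret_files ENV_FILE SECRETS_DIR
  env_file=$1
  secrets_dir=$2
  rc=0
  # Pick out every value that starts with the fixed container prefix.
  for secret_path in $(sed -n 's|^[A-Z0-9_]*=\(/etc/kafka/secrets/.*\)$|\1|p' "$env_file"); do
    name=${secret_path#/etc/kafka/secrets/}
    if [ ! -e "$secrets_dir/$name" ]; then
      printf 'missing: %s\n' "$name"
      rc=1
    fi
  done
  return "$rc"
}

# Example call, run from the directory that contains the .env file:
# check_secret_files .env /home/qsensei/secrets
```

The function returns a non-zero status when at least one referenced file is missing, so it can gate a start script.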

For the security value SASL_PLAINTEXT you have to configure the following parameters:

| Key | Value | Example |
|---|---|---|
| CONSUMER_SECURITY_PROTOCOL | SASL_PLAINTEXT | |
| CONSUMER_BOOTSTRAP_SERVERS | Comma-separated list of Kafka broker URLs | logskafka.qsensei.com:19094,logskafka.qsensei.com:29094,logskafka.qsensei.com:39094 |
| CONSUMER_SASL_MECHANISMS | Value should be one of PLAIN, SCRAM-SHA-256, SCRAM-SHA-512 | |
| CONSUMER_SASL_USERNAME | SASL username | |
| CONSUMER_SASL_PASSWORD | SASL password | |

The Kafka secrets directory /home/qsensei/secrets will be empty for this security setting.

For the security value SASL_SSL (SCRAM) you have to configure the following parameters:

| Key | Value | Example |
|---|---|---|
| CONSUMER_SECURITY_PROTOCOL | SASL_SSL | |
| CONSUMER_BOOTSTRAP_SERVERS | Comma-separated list of Kafka broker URLs | logskafka.qsensei.com:19094,logskafka.qsensei.com:29094,logskafka.qsensei.com:39094 |
| CONSUMER_SASL_MECHANISMS | Value should be one of SCRAM-SHA-256, SCRAM-SHA-512 | |
| CONSUMER_SSL_CA_LOCATION | File or directory path to CA certificate(s) for verifying the broker's key | /etc/kafka/secrets/snakeoil-ca-1.crt |
| CONSUMER_SSL_CERTIFICATE_LOCATION | Path to client's public key (PEM) used for authentication. | /etc/kafka/secrets/kafkacat-ca1-signed.pem |
| CONSUMER_SSL_KEY_LOCATION | Path to client's private key (PEM) used for authentication. | /etc/kafka/secrets/kafkacat.client.key |
| CONSUMER_SSL_KEY_PASSWORD | Private key passphrase | confluent |
| CONSUMER_SASL_USERNAME | SASL username | |
| CONSUMER_SASL_PASSWORD | SASL password | |

The Kafka secrets directory /home/qsensei/secrets will have the following files.

- CA Certificate(s)

- Client’s public key

- Client’s private key

For the security value SASL_SSL (GSSAPI) please update the following parameters:

| Key | Value | Example |
|---|---|---|
| CONSUMER_SECURITY_PROTOCOL | SASL_SSL | |
| CONSUMER_BOOTSTRAP_SERVERS | Comma-separated list of Kafka broker URLs | logskafka.qsensei.com:19094,logskafka.qsensei.com:29094,logskafka.qsensei.com:39094 |
| CONSUMER_SASL_MECHANISMS | GSSAPI | |
| CONSUMER_SASL_KERBEROS_SERVICE_NAME | Kerberos principal name that Kafka runs as, not including /hostname@REALM | kafka |
| CONSUMER_SASL_KERBEROS_KEYTAB | Path to Kerberos keytab file. | /etc/kafka/secrets/saslconsumer.keytab |
| CONSUMER_SASL_KERBEROS_PRINCIPAL | Kafka client's Kerberos principal name. | saslconsumer/logskafka.qsensei.com@LOGSKAFKA.QSENSEI.COM |
| CONSUMER_SSL_CA_LOCATION | File or directory path to CA certificate(s) for verifying the broker's key | /etc/kafka/secrets/snakeoil-ca-1.crt |
| CONSUMER_SSL_CERTIFICATE_LOCATION | Path to client's public key (PEM) used for authentication. | /etc/kafka/secrets/kafkacat-ca1-signed.pem |
| CONSUMER_SSL_KEY_LOCATION | Path to client's private key (PEM) used for authentication. | /etc/kafka/secrets/kafkacat.client.key |
| CONSUMER_SSL_KEY_PASSWORD | Private key passphrase | confluent |

The Kafka secrets directory /home/qsensei/secrets will have the following files.

- CA Certificate(s)

- Client’s public key

- Client’s private key

- krb.conf

Note

In the .env file, do not change the path prefix /etc/kafka/secrets as all secrets are expected at /etc/kafka/secrets. Only specify the names as they appear in /home/qsensei/secrets.
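The security tables in this step can be condensed into a small check that reports which required keys are still empty in the .env file. This is a sketch only: `required_keys` and `missing_keys` are hypothetical helpers, not part of the connector package, and the key lists are transcribed from the tables above.

```shell
# Sketch: keys required per security protocol, as listed in the tables above.
required_keys() {
  # Usage: required_keys PROTOCOL [SASL_MECHANISM]
  base="CONSUMER_SECURITY_PROTOCOL CONSUMER_BOOTSTRAP_SERVERS"
  ssl="CONSUMER_SSL_CA_LOCATION CONSUMER_SSL_CERTIFICATE_LOCATION CONSUMER_SSL_KEY_LOCATION CONSUMER_SSL_KEY_PASSWORD"
  login="CONSUMER_SASL_MECHANISMS CONSUMER_SASL_USERNAME CONSUMER_SASL_PASSWORD"
  kerberos="CONSUMER_SASL_MECHANISMS CONSUMER_SASL_KERBEROS_SERVICE_NAME CONSUMER_SASL_KERBEROS_KEYTAB CONSUMER_SASL_KERBEROS_PRINCIPAL"
  case "${1:-}" in
    PLAINTEXT)      echo "$base" ;;
    SSL)            echo "$base $ssl" ;;
    SASL_PLAINTEXT) echo "$base $login" ;;
    SASL_SSL)
      # GSSAPI uses Kerberos settings; SCRAM uses username and password.
      if [ "${2:-}" = "GSSAPI" ]; then
        echo "$base $kerberos $ssl"
      else
        echo "$base $login $ssl"
      fi ;;
    *) echo "unsupported security protocol: ${1:-}" >&2; return 1 ;;
  esac
}

# Print every required key that has no non-empty value in the given file.
missing_keys() {
  # Usage: missing_keys ENV_FILE PROTOCOL [SASL_MECHANISM]
  keys=$(required_keys "${2:-}" "${3:-}") || return 1
  for key in $keys; do
    grep -q "^$key=." "$1" || printf '%s\n' "$key"
  done
}

# Example call, run from the directory that contains the .env file:
# missing_keys .env SASL_SSL GSSAPI
```

An empty result means every key listed for the chosen protocol has a value; it does not verify that the values themselves are correct.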

Step 5: Configure Connector for Avro Schema Registry

If the messages in your Kafka Cluster are Avro encoded, you have to configure the following parameters.

| Key | Value | Example |
|---|---|---|
| SCHEMA_REGISTRY_URL | Schema Registry URL. | http://logskafka.qsensei.com:8081 |
| SCHEMA_REGISTRY_BASIC_AUTH_ | Must be one of URL | |
| SCHEMA_REGISTRY_SSL_CA_LOCATION | Path to CA certificate file used to verify the Schema Registry's private key. | |
| SCHEMA_REGISTRY_SSL_CERTIFICATE_LOCATION | Path to client's public key (PEM) used for authentication. | |
| SCHEMA_REGISTRY_SSL_KEY_LOCATION | Path to client's private key (PEM) used for authentication. | |
| SCHEMA_REGISTRY_BASIC_AUTH_ | Client HTTP credentials in the form of "username:password" | |

The Kafka secrets directory /home/qsensei/secrets will have the following files.

- CA Certificate(s)

- Client’s public key

- Client’s private key
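One way to sanity-check the SCHEMA_REGISTRY_URL value is to request the list of subjects from the registry, which Confluent's Schema Registry serves at the /subjects endpoint. The sketch below only builds that URL; `schema_registry_subjects_url` is a hypothetical helper, and the commented curl call assumes an unauthenticated HTTP registry that is reachable from the connector machine.

```shell
# Sketch: build the Schema Registry "list subjects" URL from the base URL.
schema_registry_subjects_url() {
  # Strip one trailing slash, if present, then append the /subjects endpoint.
  printf '%s/subjects\n' "${1%/}"
}

# Example (needs network access to the registry; add client certificates or
# credentials if your registry enforces SSL or basic authentication):
# curl -fsS "$(schema_registry_subjects_url "http://logskafka.qsensei.com:8081")"
```

A JSON array in the response indicates that the registry is reachable at the configured URL.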

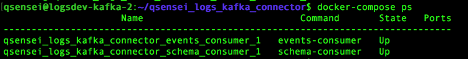

Step 6: Run the Connector

Start the connector service by running the following docker-compose command:

$ docker-compose up -d

Verify that the services are up:

$ docker-compose ps

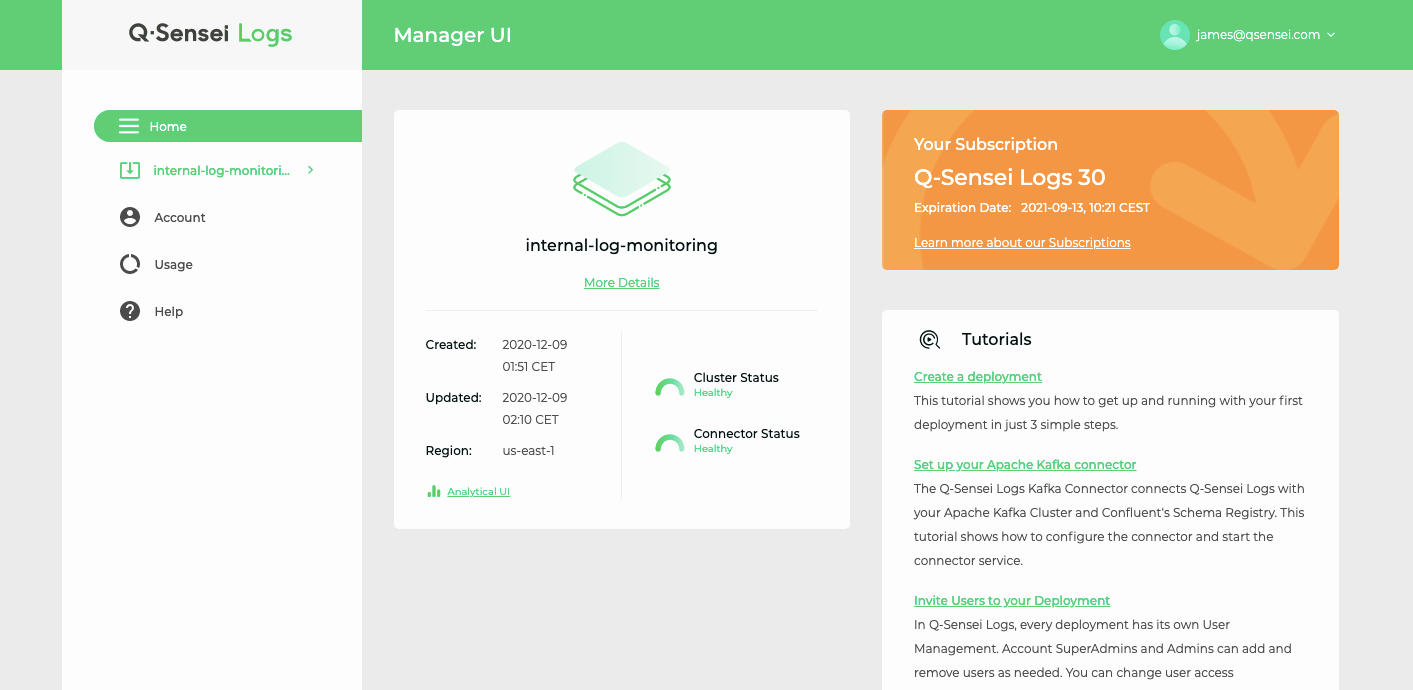

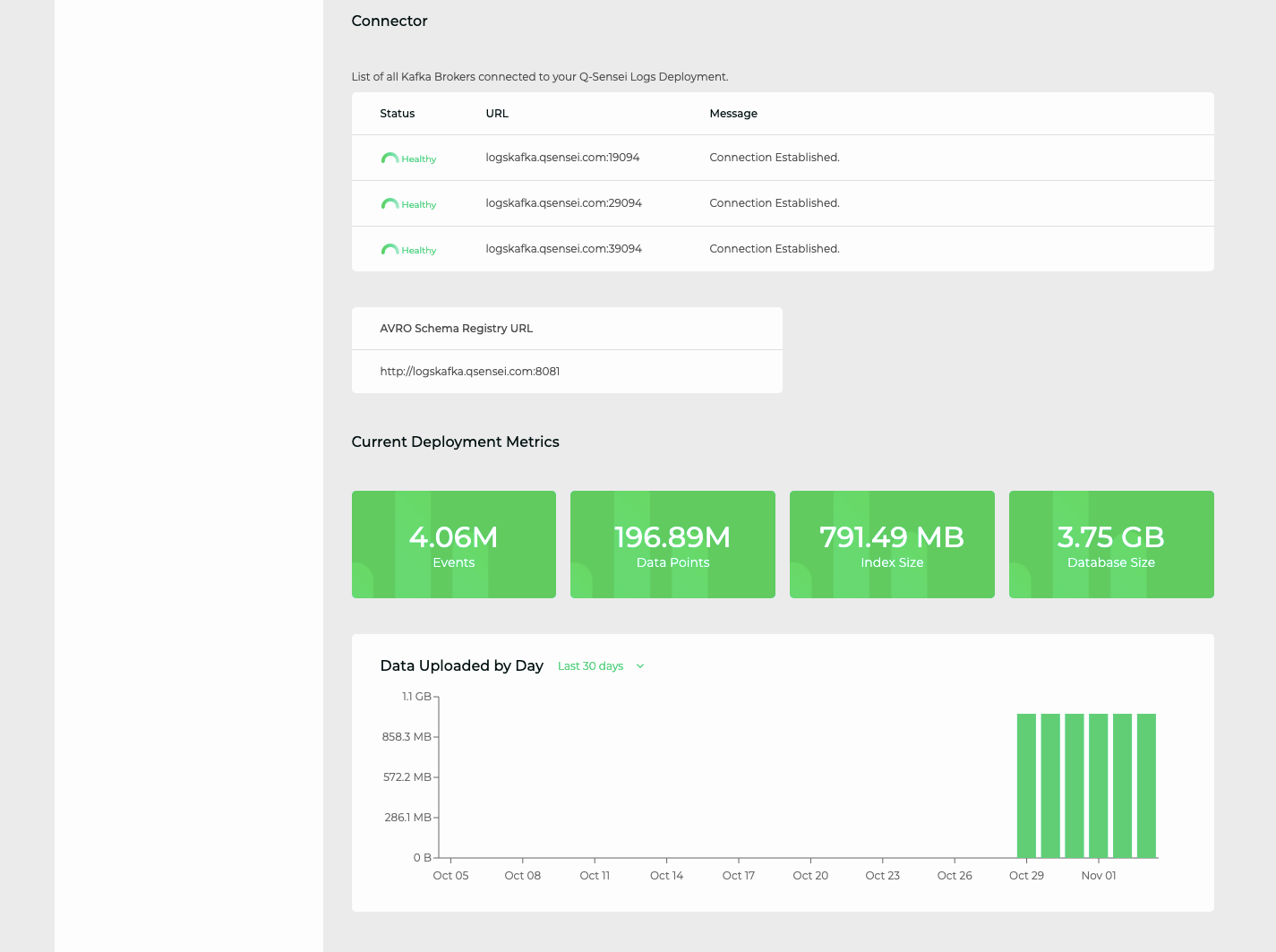

Once the connector service is up, it reports the status information on the Manager UI.

If the connector status is healthy, events will be ingested.

The status of configured brokers, schema registry, and usage is reported in the Manager UI.